Using AI to create images

Text-to-image models have been available for a while now: DALL-E and Midjourney were released over 3 years ago, and Stable Diffusion (SD) has been available for over 2 years. None of them has quite hit the mark, however. Midjourney produces the best results, but it’s paid, proprietary and was for the longest time only available via a Discord bot. DALL-E is proprietary as well, and both DALL-E and Stable Diffusion produce images with the classic sheen that makes it obvious you’re looking at something AI-generated. Out of these options, SD, despite producing images that can only be described as meh, has been the most exciting for me, as it’s the only one that can be run locally, on your own hardware, without an internet connection.

In August 2024, the Flux family of text-to-image models by Black Forest Labs became one of the latest entrants to hit the metaphorical market shelves, and it’s been making waves ever since. Flux models come in 4 variants: Schnell, Dev, Pro and Ultra. The former two are available for free, while the latter two are only available via API or partners. Schnell is the fastest but also the least accurate, while Ultra is the most accurate.

Since offline models are what I’m particularly interested in, I’ve been playing with flux.1 [dev], and in this post I’ll go over how I got it running on my laptop and some of the results I’ve gotten.

Getting started with Flux

Before going over the installation process, it’s worth mentioning that you’re going to need a fairly beefy computer if you intend to run flux.1 [dev] locally. I’m running it on a 14” MacBook Pro with an M3 Max and 64GB of unified memory, and even on this beast of a machine it’s not exactly blistering fast. You’re also going to need plenty of storage, as AI models take up a decent amount of space.
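To put “beefy” into numbers: FLUX.1 [dev] has roughly 12 billion parameters, so a back-of-the-envelope estimate of the weights alone looks like the sketch below. The bytes-per-parameter values are simply the sizes of each number format; activations, the text encoders and the VAE all come on top, so treat the result as a lower bound rather than an exact requirement.

```python
# Back-of-the-envelope estimate of the memory (and disk space) needed for the
# raw model weights alone. Activations, text encoders and the VAE come on top.

BYTES_PER_PARAM = {
    "fp32": 4,  # 32-bit float
    "fp16": 2,  # 16-bit float
    "bf16": 2,  # bfloat16
    "fp8": 1,   # 8-bit float
}


def weight_size_gb(num_params: float, dtype: str) -> float:
    """Return the size of the raw weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype!r}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    flux_dev_params = 12e9  # FLUX.1 [dev] has roughly 12 billion parameters
    for dtype in ("fp32", "bf16", "fp8"):
        print(f"{dtype}: ~{weight_size_gb(flux_dev_params, dtype):.0f} GB")
```

At 16-bit precision this comes to about 24 GB for the transformer weights alone, which goes a long way toward explaining both the memory requirements and the storage needs.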

The easiest way to get flux.1 running locally is to install a standalone GUI app that supports it, such as Draw Things or DiffusionBee. That’s a fine option for one-off use, but these apps are very limiting for more deliberate workflows, so a more capable tool is in order. One such tool is ComfyUI.

ComfyUI

ComfyUI is a node-based workflow editor GUI that can be used to generate AI images, videos and audio. To install ComfyUI, you’re going to need PyTorch installed on your machine; Mac users can follow Apple’s instructions. My personal preference is to use a separate virtual environment for each Python project, so before installing PyTorch, I created a new environment and activated it: `conda create --name comfy python=3.12 && conda activate comfy`. With PyTorch installed, you can install ComfyUI by cloning the repository with `git clone https://github.com/comfyanonymous/ComfyUI.git && cd ComfyUI` and then installing its dependencies with `pip install -r requirements.txt`.
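Before going further, it’s worth confirming that PyTorch actually sees the Mac’s GPU. The sketch below, run inside the activated `comfy` environment, checks PyTorch’s Apple Silicon backend via `torch.backends.mps.is_available()` and falls back to CUDA or CPU; the `pick_device` helper is just an illustrative name, not part of ComfyUI.

```python
from __future__ import annotations

# Quick sanity check that PyTorch is installed in the active environment and
# can see a GPU. On Apple Silicon the GPU backend is called "mps" (Metal).


def pick_device() -> str | None:
    """Return "mps", "cuda" or "cpu", or None if PyTorch is not installed."""
    try:
        import torch
    except ImportError:
        return None
    # torch.backends.mps only exists in PyTorch 1.12 and later.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"
    if torch.cuda.is_available():
        return "cuda"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    if device is None:
        print("PyTorch is not installed in this environment.")
    else:
        print(f"PyTorch will use: {device}")
```

If this prints `cpu` on an Apple Silicon Mac, the PyTorch install is the first thing to revisit, since generation on the CPU alone would be painfully slow.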

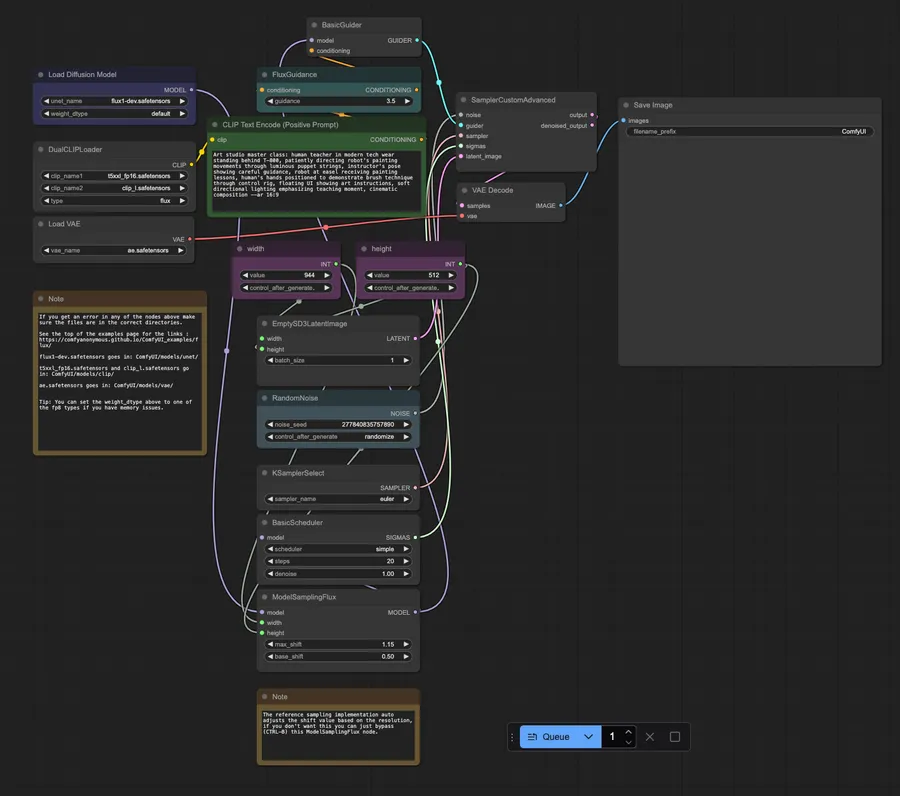

Finally, run the app with python main.py. On first launch, we are presented with a new empty workflow:

Ok great, how does one even get started with this? If you’re already familiar with node-based workflow editors and you understand how Flux works, you can probably figure it out on your own. If you’re not familiar with either, comfyanonymous has a bunch of examples of Comfy workflows. This post borrows heavily from those examples.

Even with examples, building workflows can be rather tedious and time-consuming, but luckily, you can drag and drop any image or video created with ComfyUI into the workflow editor, and it will automatically load the workflow that was used to create the original.

Try it with this image! Trying the same with the header image of this post will not work, however, since the header image has been optimized and no longer carries the workflow.

Your workflow area should now look like this:

As you can see, the workflow even contains the original prompt that was used to generate the image!
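The drag-and-drop trick works because ComfyUI’s image saver stores the prompt and the workflow as JSON in the PNG’s text metadata (as far as I can tell, under the keys `prompt` and `workflow`), which is also why an optimized copy with its metadata stripped carries no workflow. The sketch below reads that metadata using only the standard library. It assumes the JSON sits in uncompressed `tEXt` chunks and does not verify chunk CRCs; the function names are my own.

```python
from __future__ import annotations

import json
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict[str, str]:
    """Return the key/value pairs of all uncompressed tEXt chunks in a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts: dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Chunk layout: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        payload = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            # A tEXt payload is "keyword\0text", both Latin-1 encoded.
            key, _, value = payload.partition(b"\x00")
            texts[key.decode("latin-1")] = value.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 12 + length  # skip length, type, payload and CRC
    return texts


def extract_comfy_workflow(path: str) -> dict | None:
    """Return the embedded "workflow" JSON of a ComfyUI PNG, or None if it is gone."""
    with open(path, "rb") as f:
        texts = read_png_text_chunks(f.read())
    raw = texts.get("workflow")
    return json.loads(raw) if raw is not None else None
```

Calling `extract_comfy_workflow("ComfyUI_00001_.png")` (a hypothetical file name) on an original ComfyUI output should return the workflow as a dictionary, while an optimized copy should return `None`.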

Now that we have the workflow figured out (or rather copied from someone else, thank you comfyanonymous), we can start generating images. Except we can’t, since we don’t have the Flux model downloaded. Actually, we’re going to need a bit more than just the model weights: without getting too deep into theory, we need the model weights, two CLIPs and a VAE. Head over to the FLUX.1-dev HuggingFace page and download the model weights and the VAE.

You’re also going to need two CLIPs, which you can grab from here and here.
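To see why all of these downloads are needed, it helps to sketch the text-to-image pipeline as a dependency graph, which is essentially what a ComfyUI workflow is: the CLIPs turn the prompt into conditioning, the model weights generate the image in latent space, and the VAE decodes the result into pixels. The step names below are my own hypothetical labels, not ComfyUI node names; Python’s standard `graphlib.TopologicalSorter` then derives an order in which every step runs after its inputs.

```python
from graphlib import TopologicalSorter

# Simplified, hypothetical view of a Flux text-to-image graph. The names are
# illustrative labels, not ComfyUI's actual node class names.
# Each entry maps a step to the set of steps whose outputs it consumes.
FLUX_PIPELINE = {
    "load_model_weights": set(),
    "load_clips": set(),
    "load_vae": set(),
    "encode_prompt": {"load_clips"},
    "empty_latent": set(),
    "sample": {"load_model_weights", "encode_prompt", "empty_latent"},
    "decode": {"sample", "load_vae"},
    "save_image": {"decode"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every step runs after all of its dependencies."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(" -> ".join(execution_order(FLUX_PIPELINE)))
```

Remove any of the loaders from the graph and the sampler or decoder is left without an input, which is the intuition behind needing the weights, both CLIPs and the VAE.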

With the files downloaded, place the model weights `flux1-dev.safetensors` in `ComfyUI/models/unet`, the VAE `ae.safetensors` in `ComfyUI/models/vae` and the CLIPs in `ComfyUI/models/clip`. We’re all ready to rock’n’roll!
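A misplaced file is an easy mistake to make, so a small script can double-check the layout described above before launching ComfyUI. This is a sketch: `check_flux_files` is a hypothetical helper, and since the CLIP file names depend on which files you downloaded, it only checks that at least two `.safetensors` files are present in `models/clip`.

```python
from __future__ import annotations

from pathlib import Path

# Where each file should live, relative to the ComfyUI checkout.
EXPECTED_FILES = {
    "models/unet/flux1-dev.safetensors": "model weights",
    "models/vae/ae.safetensors": "VAE",
}


def check_flux_files(comfy_root: str | Path) -> list[str]:
    """Return a list of problems; an empty list means everything is in place."""
    root = Path(comfy_root)
    problems = []
    for rel_path, label in EXPECTED_FILES.items():
        if not (root / rel_path).is_file():
            problems.append(f"missing {label}: {rel_path}")
    # CLIP file names vary, so count .safetensors files instead of checking names.
    clip_dir = root / "models" / "clip"
    clips = list(clip_dir.glob("*.safetensors")) if clip_dir.is_dir() else []
    if len(clips) < 2:
        problems.append(f"expected 2 CLIP files in models/clip, found {len(clips)}")
    return problems


if __name__ == "__main__":
    issues = check_flux_files("ComfyUI")
    print("\n".join(issues) if issues else "All files are in place.")
```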

Back in the ComfyUI app, enter your prompt into the positive prompt box and hit Queue. With the dev model, generating a 1024x1024 pixel image takes anywhere from 2 minutes on the fastest MacBooks to 10 minutes or more on more budget-friendly machines.
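Hitting Queue sends the workflow to ComfyUI’s local server, and with generations taking minutes, it can be handy to queue prompts from a script instead. The sketch below assumes the workflow has been exported in ComfyUI’s API format, that the server runs at its default address `http://127.0.0.1:8188`, and that it accepts a POST to `/prompt` with a JSON body of the form `{"prompt": ...}`, as in the basic API example script in the ComfyUI repository. It also assumes the prompt box is a `CLIPTextEncode` node whose prompt input is named `text`; the node id depends on your workflow.

```python
from __future__ import annotations

import copy
import json
import urllib.request

# Default address of a locally running ComfyUI server (an assumption; adjust if changed).
COMFY_URL = "http://127.0.0.1:8188/prompt"


def build_queue_request(workflow: dict, node_id: str, prompt_text: str,
                        url: str = COMFY_URL) -> urllib.request.Request:
    """Put prompt_text into one node of an API-format workflow and build a POST."""
    # Deep-copy so the caller's workflow is not modified.
    workflow = copy.deepcopy(workflow)
    # Assumes the target node is a CLIPTextEncode node, whose prompt input is "text".
    workflow[node_id]["inputs"]["text"] = prompt_text
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To actually queue the job, pass the request to `urllib.request.urlopen()` while ComfyUI is running; the workflow itself would be loaded with `json.load()` from the exported file (for example a hypothetical `workflow_api.json`).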

Results

Now that we have the model running, let’s take a look at some of the images I’ve generated, along with the prompts used. To put the results into perspective, I also generated images with DALL-E 2, DALL-E 3 and Stable Diffusion 3.5 Large Turbo. As a disclaimer, the exact same prompt was used for all models, which is not ideal, as different models prefer different styles of prompts. At the same time, it’s only fair that the prompt is given in plain English instead of requiring the user to learn each model’s language.

Exhibit A

The first image is a side profile of a bull with a luminous orange butterfly. For this example, I created the same image with Flux, DALL-E 2, DALL-E 3 and Stable Diffusion 3.5 Large Turbo.

flux.1-dev image: A side profile of a black bull’s head with an orange light shining on it from behind. The bull is looking up at a detailed luminous orange butterfly. The background is deep black.

DALL-E 2 image: A side profile of a black bull’s head with an orange light shining on it from behind. The bull is looking up at a detailed luminous orange butterfly. The background is deep black.

DALL-E 3 image: A side profile of a black bull’s head with an orange light shining on it from behind. The bull is looking up at a detailed luminous orange butterfly. The background is deep black.

Stable Diffusion 3.5 Large Turbo image: A side profile of a black bull’s head with an orange light shining on it from behind. The bull is looking up at a detailed luminous orange butterfly. The background is deep black.

The winner is clear here: flux.1-dev produces a much more detailed and realistic image than the other models.

Exhibit B

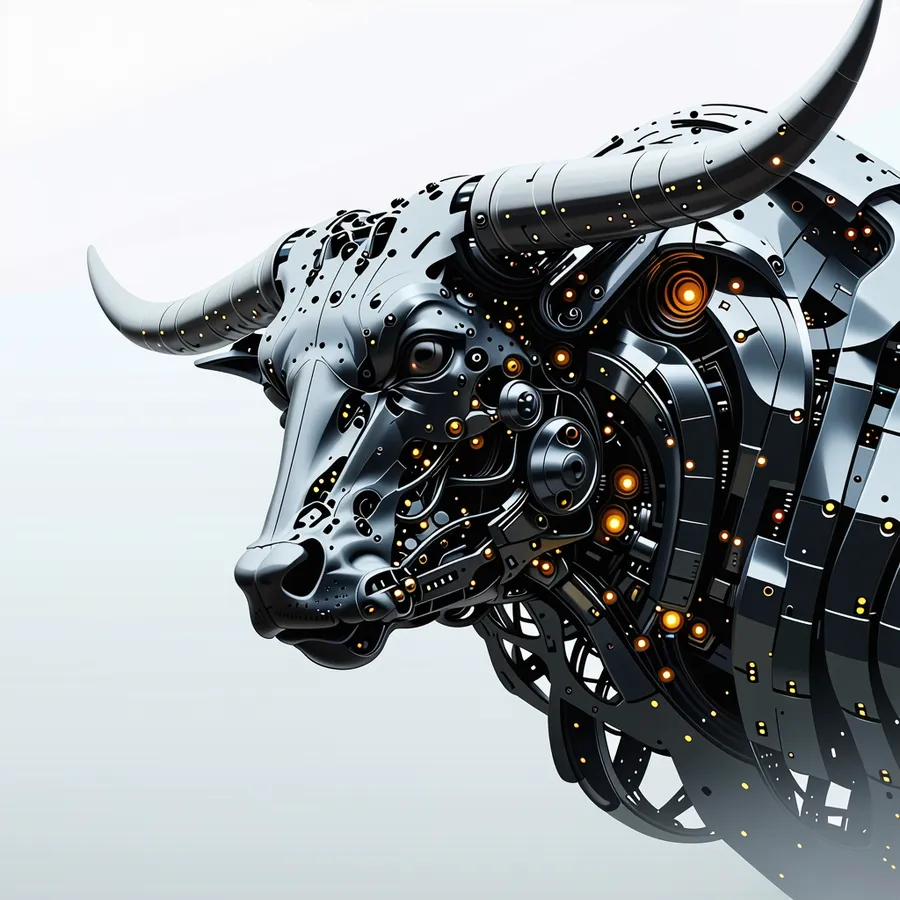

The second example is a hyperdetailed semi-ghost taurus.

flux.1-dev image: dark shot, ethereal semi-ghost taurus, biomechanical details, hyperdetailed, metal.

DALL-E 2 image: dark shot, ethereal semi-ghost taurus, biomechanical details, hyperdetailed, metal.

DALL-E 3 image: dark shot, ethereal semi-ghost taurus, biomechanical details, hyperdetailed, metal.

Stable Diffusion 3.5 Large Turbo image: dark shot, ethereal semi-ghost taurus, biomechanical details, hyperdetailed, metal.

This time, the winner isn’t quite as obvious. While I personally like the Flux image the most stylistically, it didn’t adhere to the prompt quite as well. In contrast, all the other models produced images that are more in line with the prompt, although SD completely ignored the ghost and dark shot parts of the prompt.

Exhibit C

The last comparison is a photograph of people. This is typically where AI models struggle the most, as they tend to produce uncanny images and have real trouble with eyes and hair.

flux.1-dev image: Photograph of Azerbaijani man and woman in their 20s.

DALL-E 2 image: Photograph of Azerbaijani man and woman in their 20s.

DALL-E 3 image: Photograph of Azerbaijani man and woman in their 20s.

Stable Diffusion 3.5 Large Turbo image: Photograph of Azerbaijani man and woman in their 20s.

Another landslide victory for flux.1-dev. Its image is the most realistic and detailed of the bunch; in fact, it’s so realistic that I would probably struggle to tell it’s not a real photograph. DALL-E 2 and 3 are atrociously bad, while the Stable Diffusion image isn’t terrible but is clearly very cartoonish.

Conclusion and more examples

As can be seen from the three examples, Flux is head and shoulders above the competition. It’s not perfect when it comes to prompt adherence, but the images it generates are solid more often than not, which cannot be said for DALL-E or Stable Diffusion.

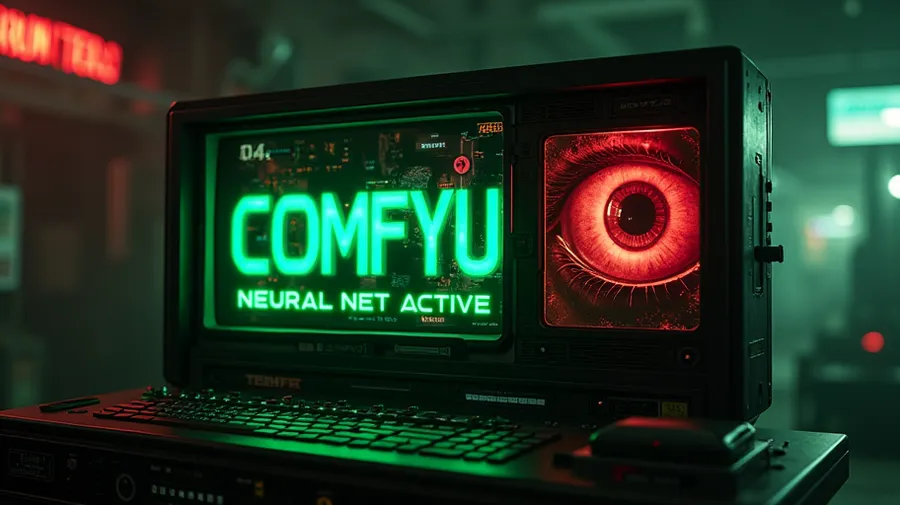

To cap off this post, here are a few more images I’ve generated with flux.1-dev:

flux.1-dev image: Retro-futuristic computer terminal with green text displaying “COMFYUI NEURAL NET ACTIVE”, red glowing Terminator eye reflection in the screen, scattered AI-generated images in the background, dark tech noir atmosphere, chrome and steel, smoke effects, neon accents, cinematic lighting, 8k --ar 16:9.

flux.1-dev image: A cyberpunk prompt engineer wearing a black Matrix-style trench coat and mirror shades, sitting in a dark tech workspace, green digital rain falling in the background, red Terminator HUD elements floating in air, multiple holographic screens showing ComfyUI interfaces, one eye subtly glowing red through glasses, dramatic cinematic lighting, volumetric fog, highly detailed, 8k, professional photography --ar 16:9

flux.1-dev image: Cyberpunk prompt engineer with neural headset and flowing dark coat on elevated platform, controlling T-800 through glowing puppet strings and command lines, robot precisely working on large oil painting showing renaissance-style scene, professional easel setup with palette and brush collection, holographic UI elements surrounding puppeteer’s workspace, streaming code transforming into artistic brushstrokes, modern art studio with classical elements, cinematic lighting, ultra detailed --ar 16:9

flux.1-dev image: Close-up portrait of Tibetan sand fox, characteristic broad flat face, triangular cheeks, piercing eyes, compact body with thick fur, natural mountain environment, high-altitude grassland setting, soft morning light, detailed fur texture, professional camera angle, ultra sharp --ar 16:9

flux.1-dev image: ram standing on a rocky hillside, overlooking a bustling valley below. The ram has a thick, curly coat and impressive curved horns. In the valley, a lively market scene unfolds, with colorful stalls and people moving about energetically. The ram stands calmly, embodying wisdom and discernment, as it gazes at the scene below. The sky above is a soft gradient of oranges and purples, suggesting the onset of evening. The image should convey a sense of calm observation amidst excitement and activity.

flux.1-dev image: Two ethereal fish circle each other in an endless dance, one silver and one gold, forming a graceful yin-yang pattern against a deep azure background. Their scales shimmer with opalescent light, and flowing ribbons of water connect them like cosmic threads. Delicate bubbles float around them, each containing tiny reflections of stars. The Pisces constellation glows softly behind them, while gentle waves of light create the impression of being underwater and in space simultaneously. The fish appear both solid and translucent, their fins trailing wisps of luminescent water as they move in their eternal circular dance.

flux.1-dev image: A hyperrealistic portrait of Scooby-Doo, portrayed with rugged features. His fur is textured and detailed, showing signs of adventure and wear. His eyes are expressive, conveying wisdom and experience. The background is softly blurred to focus attention on Scooby-Doo’s rugged appearance, highlighting his strong jawline and muscular build. Subtle lighting accentuates the rich colors and textures of his fur, creating a lifelike and captivating image.

flux.1-dev image: A hyperrealistic portrait of Scooby-Doo as a hell hound, depicted in a brutal and rough state, scowling directly at the camera with visible teeth. His fur is disheveled and matted, with an otherworldly, fiery glow emanating from beneath, showing signs of hardship and neglect. His eyes are piercing blue and hollow, reflecting a deep sense of despair and a lack of will to live. His expression is one of anger and resentment, with a worn and rugged appearance. The background is dark and infernal, with flames subtly flickering, emphasizing the raw emotion and turmoil in Scooby-Doo’s face. Subtle, dramatic lighting highlights the textures and shadows, creating a powerful, haunting, and supernatural image.

flux.1-dev image: A hyperrealistic portrait of a nuclear blast survivor, styled similarly to the iconic National Geographic Afghan Girl. The survivor is disheveled, with unkempt hair and tattered clothing, reflecting the devastation endured. His piercing blue eyes are intense and soulful, staring directly into the viewer’s soul, conveying a profound mix of trauma and resilience. His face is marked with ash and dirt, adding to the raw, gritty realism of the image. The background is softly blurred, focusing attention on the survivor’s striking features, much like the Afghan Girl portrait. Subtle, natural lighting enhances the vividness of his eyes and the textures of his skin, creating a powerful and evocative image.

flux.1-dev image: Close-up portrait of a woman illuminated by soft, warm afternoon light streaming through window blinds, creating striking shadow patterns across her face. She has a natural, glowing complexion with dewy skin and subtly highlighted cheekbones. Her expressive eyes are accented with long lashes and a hint of soft eyeshadow, while her full lips are painted in a soft peach shade. The background features muted teal walls that enhance the warmth of the scene. She wears a dark, pinstriped blazer, adding a touch of elegance. The overall aesthetic is hyperrealistic, capturing intricate details like the texture of her skin and the delicate play of light and shadow, with a color palette of warm neutrals and soft pastels.